I recently came across the delightful book MapReduce Design Patterns by Donald Miner and Adam Shook. The book is an indispensable addition to the collection of any self-respecting big data professional. It is on par with another favorite of mine, RESTful Web Services Cookbook. Both books are perfect examples of the right mix of theory and practice. MapReduce Design Patterns deals with the difficult big data problems that developers and architects encounter, and provides focused, pragmatic and conceptually sound solutions. Kudos to O’Reilly for providing us with such pearls of wisdom.

Several projects that I’ve been involved with could have sorely used this book. Hungry developers eager to adopt hot new Hadoop technologies initially dazzle business folks with their preliminary efforts. The initial results of monetizing large amounts of passive data make everyone happy.

Here’s one case I witnessed. In the first stage, ad server logs from a dozen machines are pushed to an HDFS cluster, MapReduce boils them down to CSV import files, and these are then loaded into a MySQL “data warehouse”. As ad requests rapidly increase, the number of ad server machines grows to 20 or 30, and the initial architecture starts buckling as the sheer complexity of many moving parts overwhelms the team. Without a proper grounding in big data concepts and frameworks, things fall apart in the face of higher volume and velocity. This is a good example of quantitative changes resulting in qualitative ones. In short, the first step is to read MapReduce Design Patterns from cover to cover!

One of the great things about the book is that it covers lower-level patterns such as Numerical Summarization and Counting with Counters, but also higher-level abstractions such as Metapatterns, which are patterns composed of other patterns, such as Job Chaining and Job Merging. You can’t ask for more: there are chapters on Bloom Filtering, Binning, Replicated Joins, etc. Ultimately this would lead us to top-level architecture patterns such as Lambda Architecture, but that is the topic of a future blog post. The final chapter touches upon future directions regarding non-textual formats such as image, audio, and video.
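To give a flavor of what a lower-level pattern looks like, here is a minimal sketch of the idea behind Numerical Summarization in plain Python. It is not code from the book and does not use Hadoop: the function names (`map_record`, `merge_summaries`, `run_job`) and the sample records are my own assumptions, and the "shuffle" is just an in-memory dictionary.

```python
from collections import defaultdict

def map_record(record):
    """Map step: emit (key, partial summary) for one (user, value) record.
    The partial summary is a (min, max, count) tuple."""
    user, value = record
    yield user, (value, value, 1)

def merge_summaries(a, b):
    """Reduce step: merge two partial summaries.
    The merge is associative and commutative, so the same function
    could also be applied early as a combiner."""
    return (min(a[0], b[0]), max(a[1], b[1]), a[2] + b[2])

def run_job(records):
    """Hypothetical in-memory driver: map, group by key, then reduce."""
    grouped = defaultdict(list)
    for record in records:
        for key, partial in map_record(record):
            grouped[key].append(partial)  # stands in for the shuffle phase
    results = {}
    for key, partials in grouped.items():
        merged = partials[0]
        for partial in partials[1:]:
            merged = merge_summaries(merged, partial)
        results[key] = merged
    return results

# Made-up sample data: (user, value) pairs.
records = [("alice", 3), ("bob", 10), ("alice", 7), ("bob", 2), ("alice", 5)]
summary = run_job(records)
for user in sorted(summary):
    print(user, summary[user])
```

The point of the sketch is that the summary is built from small partial results that can be merged in any order, which is what lets an engine compute it in parallel across many machines.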

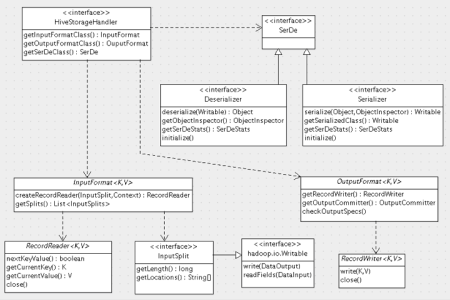

My particular favorite is Chapter 7, Input and Output Patterns, as I have recently been trying to hammer out an external table feature for a SQL-on-Hadoop database à la Hive’s StorageHandler. This chapter provides great clarity and insight on the topic. The authors start off by describing the basic underpinnings of key Hadoop interfaces such as InputFormat/RecordReader and the corresponding OutputFormat/RecordWriter from a high-level conceptual perspective. After reading this chapter, you understand the why as well as the how of these key interfaces. The chapter then goes beyond the common file-based formats to explore the more adventurous topic of connecting input and output sources to systems outside Hadoop and HDFS. The chosen example is the rocking NoSQL Redis database. This is a must-read for anyone trying to connect Hadoop to external systems.
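The division of labor between the two input-side interfaces can be sketched roughly as follows. This is a conceptual illustration in plain Python, not the Hadoop API and not the book's Redis example: the class `DictInputFormat`, its method names, and the in-memory dictionary standing in for an external key-value store are all assumptions of mine. The "input format" decides how the source is carved into splits, and a "record reader" turns one split into a stream of key/value records.

```python
class DictInputFormat:
    """Hypothetical input format over an external key-value store,
    modeled here as a plain dict."""

    def __init__(self, store, num_splits):
        self.store = store
        self.num_splits = num_splits

    def get_splits(self):
        """Carve the key space into num_splits chunks (round-robin over
        sorted keys). Each split could be handed to a separate task."""
        keys = sorted(self.store)
        return [keys[i::self.num_splits] for i in range(self.num_splits)]

    def create_record_reader(self, split):
        """Yield the (key, value) records belonging to one split."""
        for key in split:
            yield key, self.store[key]

# Made-up sample data standing in for the external system.
store = {"a": 1, "b": 2, "c": 3, "d": 4, "e": 5}
input_format = DictInputFormat(store, num_splits=2)

splits = input_format.get_splits()
records = [
    record
    for split in splits
    for record in input_format.create_record_reader(split)
]
print(splits)
print(records)
```

An output format and record writer would mirror this on the way out: one object per task that accepts key/value pairs and writes them to the target system.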

The one issue I had with the book is the word “MapReduce” in its title. Most of the patterns are not in any way specific to MapReduce. Of course, when the book was written, MapReduce was the only game in town, but we now have a new generation of execution engines such as Spark and Tez. For the most part, the patterns are equally applicable to these other execution engines or even to a roll-your-own solution. I would love to see a companion book that describes the patterns with Spark code samples.

Resources:

- MapReduce Design Patterns book – O’Reilly site – Amazon site

- github.com/adamjshook/mapreducepatterns – GitHub site for book

- MapReduce Design Patterns slides – by Donald Miner – Oct. 2013

- Donald Miner blogs at Pivotal

- Adam Shook blogs at Pivotal

- MapReduce Patterns, Algorithms, and Use Cases – highlyscalable – Katsov – Feb. 2012

- Map Reduce Patterns – InfoQ – Feb. 2012